Palette Application

Initially conceived of as a "Tinder for Art," Palette was designed to match clients with art advisors.

Early testing showed that a "like"/"dislike" system was ineffective at predicting aesthetic taste.

Later, we found that the renown of a person's three favorite artists was a strong predictor, particularly when paired with a set of images rated from 1 to 4 stars (dislike to like).

Role: Design Lead | Time: January - March, 2016

Product Development Phases

V1

V2

V3

V4

Detailed Process Overview

THE PROBLEM

Art experts are often highly specialized. Similarly, clients often have fairly specific aesthetic tastes. One of the greatest value propositions of our platform was matching a client’s taste with an advisor’s area of expertise. An expert in early 18th-century French painting would be a poor fit for a client interested in mid-20th-century Abstract Expressionism, for example.

We soon discovered that our target consumer often lacked the knowledge or vocabulary to quickly convey his or her aesthetic tastes, creating a significant match-making problem.

DESIRED DELIVERABLES

In response to this problem, I set about designing a product with two goals in mind:

Improved Advisor Matching

Determine the client’s aesthetic tastes and acquisition goals

Match with an appropriate acquisition advisor based on a) location and b) expertise

Speed Up Initial Consultation

Communicate major taste/preference trends to the art expert to create a starting point for the initial consultation
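The write-up does not describe an automated matcher, and the launched product relied on manual match-making. Purely as an illustration of the two matching criteria above, a minimal sketch might filter advisors by location and then order them by how much their expertise overlaps with the client's tastes. The `Advisor` and `Client` types, the tag names, and the overlap score are all assumptions made for this example, not part of the original product.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Advisor:
    # Hypothetical record; field names are assumptions for this sketch.
    name: str
    location: str
    expertise: frozenset


@dataclass(frozen=True)
class Client:
    # Hypothetical record; tastes use the same tag vocabulary as expertise.
    location: str
    tastes: frozenset


def match_advisors(client, advisors):
    """Return advisors in the client's location, best expertise overlap first.

    Advisors with no overlapping expertise are dropped.
    """
    local = [a for a in advisors if a.location == client.location]
    scored = [(len(a.expertise & client.tastes), a) for a in local]
    # sorted() is stable, so ties keep their original order.
    ranked = sorted(scored, key=lambda pair: pair[0], reverse=True)
    return [advisor for score, advisor in ranked if score > 0]


advisors = [
    Advisor("A", "New York", frozenset({"18th-century French painting"})),
    Advisor("B", "New York", frozenset({"abstract expressionism", "minimalism"})),
    Advisor("C", "London", frozenset({"abstract expressionism"})),
]
client = Client("New York", frozenset({"abstract expressionism"}))
matches = match_advisors(client, advisors)
```

In this example only advisor B is returned: A is local but specializes in a different period, and C shares the client's interest but is in another city.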

USER TESTING: EARLY AND OFTEN

Before digging into early ideation and wireframing, I wanted to test a few hypotheses I had about our consumers. Using a set of 20 printed art images, I interviewed five potential users with a broad range of art-world familiarity. My goal was to assess what types of questions (and which images) generated the best profile of a consumer’s tastes.

In each case, I tested the consumer taste profile that I had developed by showing the interviewee artworks that I felt they should be positively predisposed to, based on my findings.

INITIAL FINDINGS & CHALLENGES

Breadth of Aesthetics: consumers exhibited a very broad range of aesthetic tastes and conceptions of "fine art"

Limited Vocabulary: users often lacked the core vocabulary, experience or confidence to verbally describe their tastes beyond “liking” or “disliking” a particular artwork

Varying Exposure: a consumer’s level of art world exposure was often one of the best indicators of what “genres” of art he or she would be attracted to

Acutely Self-Conscious: One surprising finding was how acutely self-conscious individuals with limited art backgrounds were. They often exhibited discomfort when asked to go beyond “like” or “dislike” and almost always prefaced answers with “I don’t know anything about art, but…”

Fun Fact: Throughout my research process I found that individuals with limited art exposure tended to be drawn to highly figurative works, or works that clearly involved a significant amount of time and technical skill. Individuals with broader exposure were more frequently predisposed to minimalist artworks.

EARLY IDEATION & INFLUENCES

Based upon initial user interviews—and in deference to users with limited art exposure—I decided to keep our initial product purely visual and to leave out any potentially alienating questions or jargon.

Based on this decision, I looked to BuzzFeed “hot-or-not” quizzes and Tinder for early product inspiration.

INITIAL BETA: "Tinder for Art"

The initial prototype was conceived of as a "Tinder for Art" with left/right swipe functionality to quickly collect user feedback on as many images as possible. I assumed that by keeping the functionality extremely minimalistic, users would provide enough data to allow me to make inferences about their aesthetic tastes.

I quickly created a rough visual prototype using Proto.io (featured) but, to streamline testing, opted to simply create a quick “like” or “dislike” Google survey.

BETA RESULTS

As in my initial user research, I created a taste profile from the survey responses of a set of beta testers. In each case, I emailed the user a set of artworks that I thought they would be attracted to based on the results.

Early testing showed a significantly lower success rate than the more organic, question-focused interviews.

ADDITIONAL RESEARCH

Returning to the drawing board, I looked for inspiration from other consumer product surveys and matching applications. In particular, I examined OkCupid’s approach to asking questions and weighting responses, Stitch Fix’s image-comparison-style quiz, and Homepolish’s integration of questions and image surveys.

Based on this research, I conducted three quick user tests:

ONE: IMAGE COMPARISON

To better identify which features in each image users were attracted to, I focused on image comparison.

Each image pair was selected to juxtapose a specific aesthetic trait (for example, abstract vs. figurative).

RESULTS: Image comparisons gave better results, but choices were often misleading. A comparison of an abstract Pollock painting and a figurative Sargent painting (featured) was meant to broadly test whether users were more drawn to figuration or abstraction, but it often led to false positives, particularly if the user was already familiar with either of the artworks.

In post-survey interviews, some users also noted a problem: there was no option to express interest in both figuration and abstraction, or in something in between.

TWO: INTEGRATING QUESTIONS

Going back to basics, I created a survey that asked users about their aesthetic tastes and interests through multiple-choice, long-form and short-form questions.

RESULTS: In post-survey interviews, users with little or no previous art background said they were significantly dissatisfied with, or intimidated by, the long-form questions. I also discovered that one of the best and most efficient indicators of preference was to ask users to list their three favorite visual artists.

Conveniently, this question also provided significant insight into a user’s art exposure. Users with little art exposure often cited household names (Warhol, Picasso, Van Gogh), while users with greater art exposure cited increasingly obscure names (Duchamp, Hannah Höch, El Lissitzky).

THREE: RATING SYSTEM

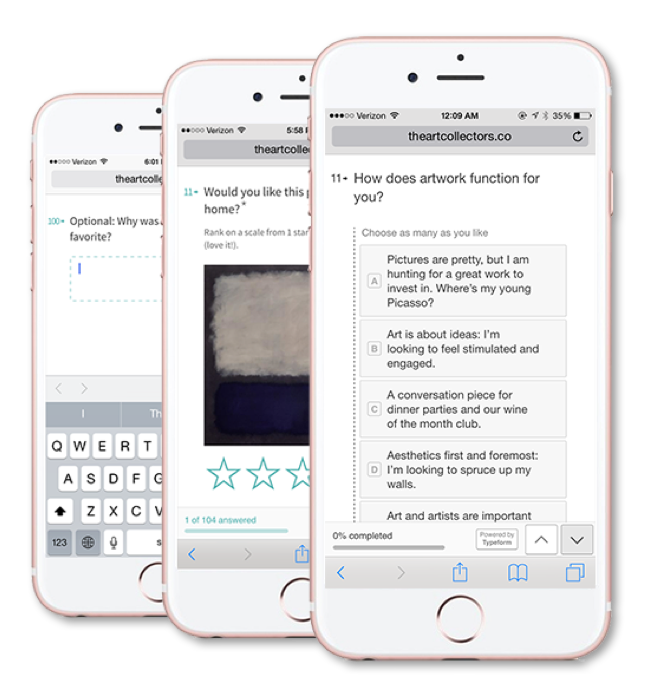

Returning to the "Hot-or-Not" methodology, I added a rating system. Users rated each image from 1 to 5 stars (“dislike” to “like”).

RESULTS: In conjunction with the list of favorite artists, the rating system resulted in significantly better predictions of which artist/artwork a user might be drawn to.

Subsequent tests found that a 1 to 4 star rating provided the best results, forcing users to choose a preference and eliminating neutral responses.
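To illustrate why an even-numbered scale removes neutral responses, here is a minimal sketch of how 1 to 4 star ratings could be rolled up into a per-trait taste profile. This is not the method used in the project, where profiles were built by hand. The trait tags per image, the function name `taste_profile`, and the choice to center scores on 2.5 are all assumptions made for the example.

```python
from collections import defaultdict

MIDPOINT = 2.5  # center of a 1-4 scale; no single rating can equal it


def taste_profile(ratings, image_traits):
    """Average centered rating per trait.

    ratings:      {image_id: stars}, stars being an integer from 1 to 4
    image_traits: {image_id: iterable of trait tags} (hypothetical tagging)

    A positive score means the user leans toward the trait, a negative
    score means they lean away from it.
    """
    totals = defaultdict(float)
    counts = defaultdict(int)
    for image_id, stars in ratings.items():
        if stars not in (1, 2, 3, 4):
            raise ValueError(f"rating for {image_id!r} must be 1-4, got {stars!r}")
        for trait in image_traits.get(image_id, ()):
            totals[trait] += stars - MIDPOINT
            counts[trait] += 1
    return {trait: totals[trait] / counts[trait] for trait in totals}


ratings = {"img-a": 4, "img-b": 1, "img-c": 3}
image_traits = {
    "img-a": ["abstract"],
    "img-b": ["figurative"],
    "img-c": ["abstract", "minimalist"],
}
profile = taste_profile(ratings, image_traits)
```

Because every individual rating sits above or below the midpoint, each image contributes a clear lean toward or away from its traits, although averages across several images can still cancel out.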

USER TESTING SUMMARY

I conducted lean one-on-one user testing to find the best mix of mediums for quickly identifying core user tastes.

DISCOVERIES:

A user-provided list of "favorite artists" served as the quickest and most accurate method of identifying art know-how

Supplemental long-form questions (e.g. "why did you dislike this work") significantly increased survey time and decreased user satisfaction without significantly improving matching outcomes

Hot-or-Not style survey with users rating individual works maximized feedback while minimizing user time

A rating system from 1 to 4 stars optimized user feedback

LAUNCH

We launched a version of the product featuring the 4-star rating system and favorite artist question as part of our online sign-up process. Notably, the initial product required manual review and match-making and lacked any features to communicate a consumer’s aesthetic tastes to his or her assigned art expert.

POST-LAUNCH FEATURE CHANGES

A later beta version of the product attempted to use decision trees to increase the quality of data collected while minimizing time. The decision tree beta was ultimately abandoned due to time constraints.

To address the second priority, speeding up initial consultations, a feature was later added to export survey results into a formatted PDF document complete with artwork images and ratings. Though the solution was very basic, the art experts were able to make strong inferences from it.